Responsible AI

What are we working on?

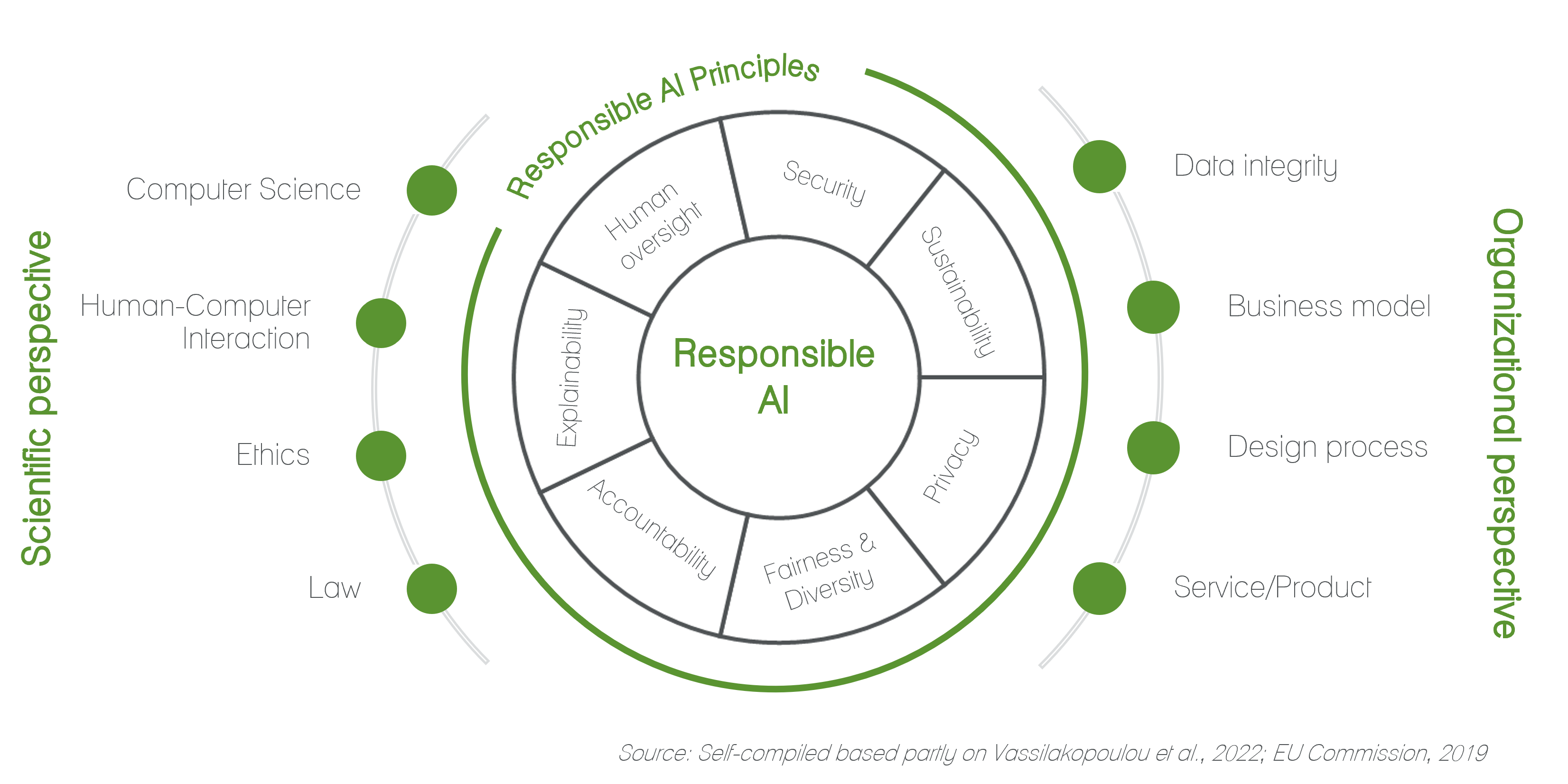

Responsible AI (RAI) involves developing ethical, transparent, accountable, and fair AI systems. In 2019, the European Commission outlined principles for RAI that emphasize human oversight, safety, privacy, diversity, fairness, accountability, transparency, and societal impact.

RAI research spans four main areas:

- Computer Science: Ensures AI fairness and reliability.

- Human-Computer Interaction: Focuses on AI that enhances human capabilities.

- Philosophy & Ethics: Advocates for AI’s alignment with human values.

- Law: Addresses the legal aspects of AI, shaped by the EU AI Act, including compliance and the implications of AI within legal frameworks.

From an organizational perspective, RAI integrates into the entire AI lifecycle, emphasizing data integrity, ethical business models, collaborative design processes, and continuous monitoring to ensure AI systems are beneficial and safe.

Our research strategy on Responsible AI is positioned at the intersection of selected domains from both scientific and organizational perspectives. Scientifically, we focus on the technical trustworthiness of AI within Computer Science, striving for algorithms that are equitable and reliable. We also focus on Human-Computer Interaction to ensure that AI systems are user-centric and augment human decision-making rather than replace it. Concerning Ethics, we emphasize the significance of ethical frameworks in the practical deployment of AI, especially in healthcare. Organizationally, we prioritize data integrity and the ethical design process: we aim to develop AI systems with unbiased and representative data, and with moral considerations at each design stage.

In conclusion, our work on RAI represents a collaborative synthesis of the selected scientific and organizational elements. We aim to create AI systems that are not just technologically advanced, but also socially responsible and ethically conscious.

Exemplary Applications

- Healthcare:

  - Ensuring equity in patient care by designing AI tools that support diagnosis and treatment plans and counteract existing biases in healthcare delivery.

  - Using digital twin technology to support disease prevention and treatment, cost reduction, patient autonomy and freedom, and equal treatment.

- Education:

  - Developing AI tutoring and teaching tools that adapt to the individual learning style without discrimination.

  - Promoting the well-being of teachers by reducing the time spent on manual tasks, so they can focus more on teaching and guiding students.

- Media:

  - Responsible moderation of content in social media channels, balancing the right to freedom of speech against the need to avoid inflicting harm.

  - Responsible AI practices for adaptive streaming, content adaptation, and multi-device adaptation.

Principal Investigator

Dr. Pavlina Kröckel

Post-Doc and Habilitation Candidate at FAU Erlangen-Nürnberg in Nuremberg, Germany
